Cisco faces a growing security crisis after confirming that Chinese-linked hackers are exploiting a critical zero-day flaw in some of its most widely deployed email security products, enabling full takeover of vulnerable devices with no patch currently available. The vulnerability, tracked as CVE-2025-20393, is an improper input validation flaw in Cisco’s AsyncOS software that powers Cisco Secure Email Gateway and Cisco Secure Email and Web Manager appliances. Under specific conditions, it allows attackers to execute commands with unrestricted privileges, install persistent backdoors and effectively seize total control of affected systems.

According to CyberScoop, AI tools have transformed the information ecosystem into a strategic weapon. Deepfakes, synthetic news anchors, and coordinated bot networks enable influence operations on a scale once unimaginable. What was once the domain of state-backed information units has become cheap, fast, and easy to replicate. Whoever controls the narrative can justify escalation, legitimize claims, and shape global perceptions without firing a single shot.

In July 2024, a deepfake audio clip of President Ferdinand Marcos Jr allegedly ordering an attack on China went viral across Philippine social media, igniting fear and confusion before authorities verified it as fake. The episode exposed a new vulnerability: AI can blur the line between truth and fiction so effectively that public reactions arrive long before official clarifications. The result is not just disinformation but potential diplomatic destabilization.

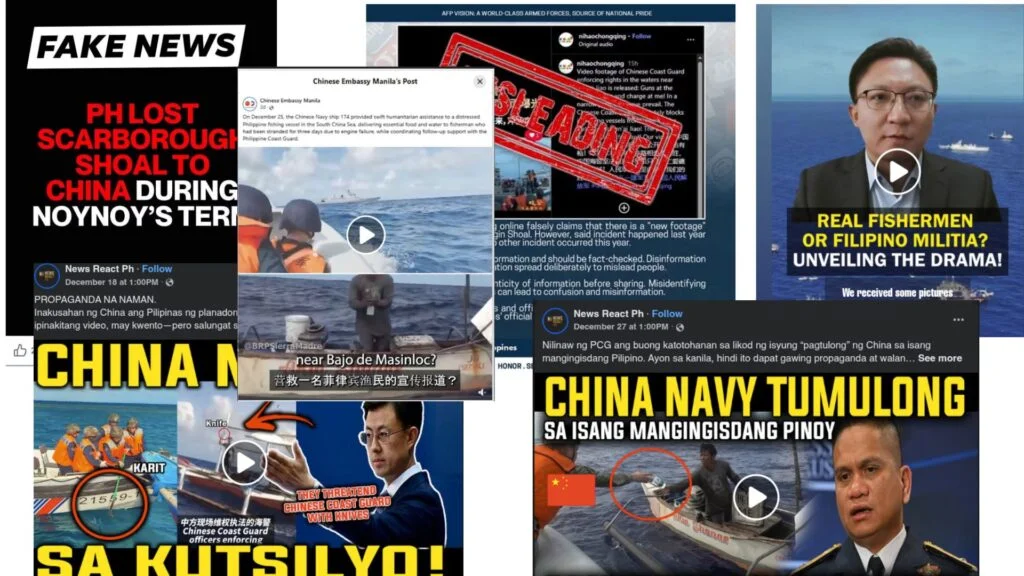

Behind the scenes, a complex web of propaganda networks thrives. Reports by PressOne.PH, Graphika, and Agence France-Presse reveal monetized, AI-powered content farms producing pro-China narratives or inflammatory war scenarios under the guise of “news.” Fake accounts with AI-generated profile photos amplify these posts, making propaganda appear organic. Each misleading video or story, often earning between US$20 and US$70 in ad revenue, trades in outrage, not truth. Conflict, in the algorithmic age, has become content.

This digital conflict profits from public vulnerability. A study by the Philippine Presidential Communications Office found that over half of Filipinos struggle to identify fake news, and nine in ten encounter false information online. Such fragility makes the public fertile ground for manipulation. AI-generated propaganda doesn’t just misinform; it can manufacture consent for war, stoking nationalism and legitimizing calls for escalation despite the country’s limited military readiness.

China’s use of “cognitive warfare” adds another layer. AI-enhanced spokespersons, algorithmically tuned speech patterns, and emotionally charged narratives work by shaping perceptions rather than by winning arguments. As cognitive warfare spreads, democratic deliberation risks being replaced by emotional conditioning, a global concern that reaches far beyond the South China Sea.

Efforts to fight back are uneven. The Philippines is drafting AI regulation bills aimed at curbing deepfakes before the 2025 elections, but cross-border enforcement remains limited. China mandates labelling of AI-generated content, yet its regulations enforce compliance with state narratives rather than transparency. Meanwhile, platforms like Meta and X have rolled back professional fact-checking, leaving users to navigate a flood of deceptive content with little institutional support.

Even the media’s countermeasures pose ethical dilemmas. Manila’s “transparency initiative,” where journalists join naval missions to the disputed waters, promotes real-time reporting but risks blurring lines between state messaging and independent journalism. In information warfare, access can come at the price of autonomy.

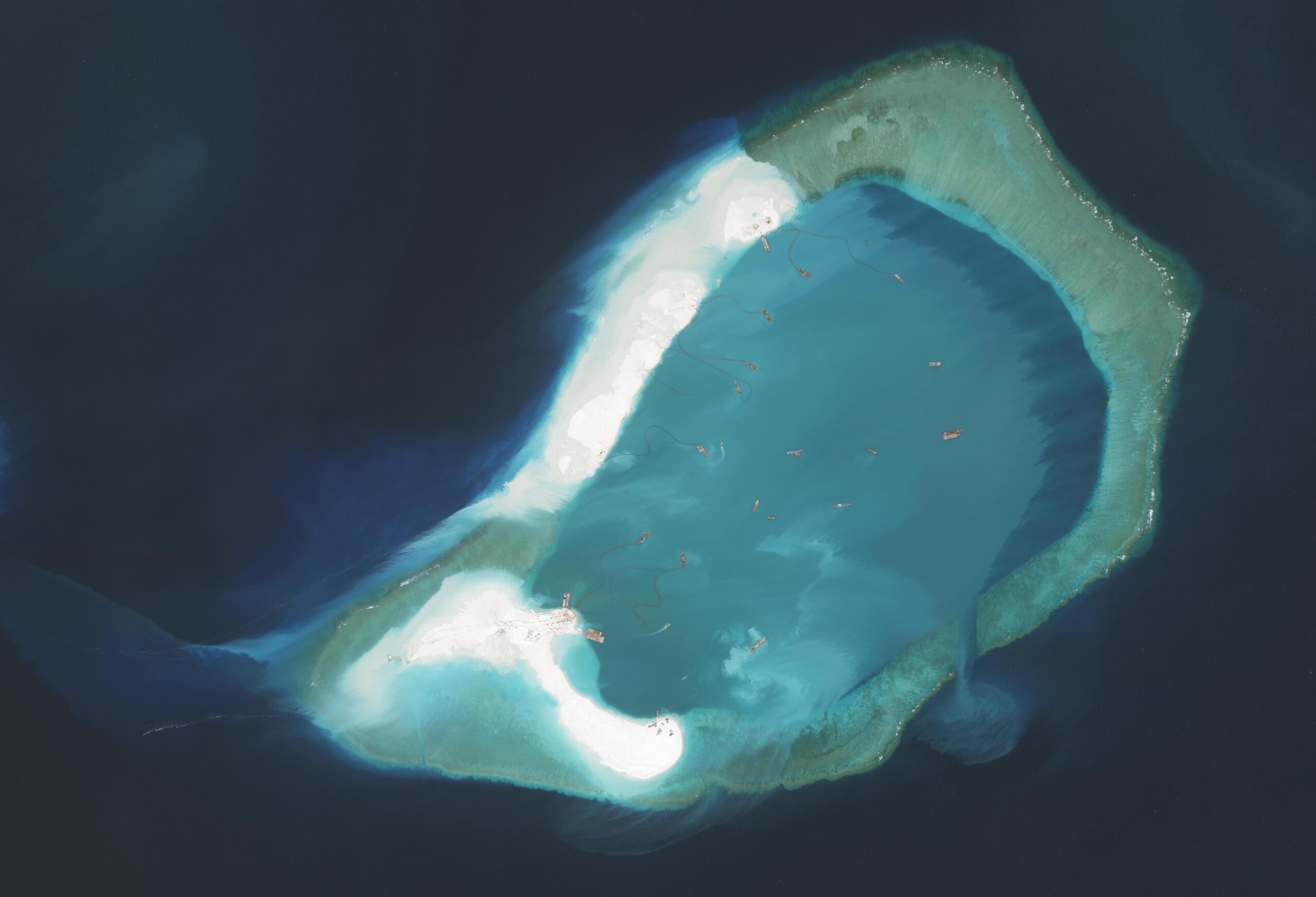

The South China Sea has become a test case for the weaponization of AI in modern geopolitics. What begins here could soon echo in other contested zones: the Taiwan Strait, the Arctic, or Africa’s resource corridors. The implications are global: one-third of world trade sails through these waters. If AI-propelled misinformation inflames regional tensions or distorts policy decisions, the consequences will ripple through supply chains, alliances, and the very norms of international law.

To counter this tide, stakeholders must act collectively. Regulators need enforceable international norms on AI-generated content. Platforms must invest in deepfake detection and transparent algorithms. Civil society must expand fact-checking networks and media literacy programs that treat misinformation as a national security threat.

The South China Sea’s digital warfront shows the new shape of conflict: one fought with algorithms, not artillery. The battle for territory has merged with the battle for truth, and the world is watching to see who wins.
